Machine perception refers to the ability of machines, particularly computers and artificial intelligence systems, to interpret and understand data from the world around them using sensors, cameras, microphones, and other input devices. It encompasses a wide range of techniques and technologies aimed at enabling machines to perceive, understand, and interact with their environment in a manner similar to humans.

At its core, machine perception involves processing and analyzing sensory data to extract meaningful information, make sense of the surrounding environment, and take appropriate actions based on the perceived inputs. This process often involves multiple stages, including data acquisition, preprocessing, feature extraction, pattern recognition, and decision making.
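
The stages listed above can be sketched as a chain of small functions. This is a toy illustration under stated assumptions: the function names, the fixed sensor readings, the clipping range, and the event threshold are all hypothetical, and each stage uses a simple rule where real systems would typically use learned models.

```python
from statistics import mean

def acquire():
    # Hypothetical sensor read: a fixed list of readings stands in for real hardware.
    return [0.1, 0.2, 5.4, 5.1, 12.0, 0.3, 0.1]

def preprocess(samples, lo=0.0, hi=10.0):
    # Clip readings into an assumed valid range to suppress glitches.
    return [min(max(s, lo), hi) for s in samples]

def extract_features(samples):
    # Summarize the raw samples as a few numbers.
    return {"mean": mean(samples), "peak": max(samples)}

def recognize(features, threshold=3.0):
    # Rule-based pattern recognition: a high peak counts as an "event".
    return "event" if features["peak"] > threshold else "background"

def decide(label):
    # Map the recognized pattern to an action.
    return "alert" if label == "event" else "ignore"

def perceive(samples):
    # Run every stage in order: preprocess -> features -> recognition -> decision.
    return decide(recognize(extract_features(preprocess(samples))))

print(perceive(acquire()))  # alert
```

Keeping each stage as a separate function makes it easy to swap one out, for example replacing the threshold rule in `recognize` with a trained classifier, without touching the rest of the pipeline.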

One of the fundamental challenges in machine perception is dealing with the inherent complexity and variability of real-world data. Sensory data captured by cameras, microphones, or other sensors can be noisy, incomplete, or ambiguous, making it difficult for machines to accurately interpret and understand. To address this challenge, researchers and engineers employ various techniques from fields such as computer vision, signal processing, and machine learning.
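
One of the simplest signal-processing responses to noisy sensor data is a moving average, which replaces each sample with the mean of a short window of recent samples. The sketch below is a minimal illustration; the function name and the default window size are assumptions, not taken from any particular library.

```python
def moving_average(signal, window=3):
    """Smooth a 1-D signal with a trailing moving average.

    The first window-1 outputs average over the fewer samples available so far.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    smoothed = []
    running = 0.0
    for i, x in enumerate(signal):
        running += x                     # add the newest sample
        if i >= window:
            running -= signal[i - window]  # drop the sample leaving the window
        smoothed.append(running / min(i + 1, window))
    return smoothed

print(moving_average([0, 3, 0, 3], window=2))  # [0.0, 1.5, 1.5, 1.5]
```

A larger window suppresses more noise but also delays and blurs genuine changes in the signal, so the window size is a trade-off between smoothness and responsiveness.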

Computer vision is a subfield of machine perception that focuses on enabling computers to extract information from digital images or videos. It involves developing algorithms and techniques for tasks such as object detection, recognition, segmentation, tracking, and scene understanding. Computer vision plays a crucial role in applications ranging from autonomous vehicles and robotics to medical imaging and surveillance systems.
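
As a deliberately simple illustration of segmentation and detection, the sketch below thresholds a small grayscale image into foreground and background and then reports the bounding box of the foreground pixels. The function names, the list-of-lists image representation, and the threshold of 128 are assumptions for illustration; practical detectors are usually learned models rather than fixed thresholds.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale, row-major, values 0-255

def segment(image: Image, threshold: int = 128) -> List[List[bool]]:
    # Binary segmentation: pixels brighter than the threshold are foreground.
    return [[px > threshold for px in row] for row in image]

def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    # Return (top, left, bottom, right) of all foreground pixels, inclusive,
    # or None if there is no foreground at all.
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1], cols[-1]

img = [
    [0,   0,   0, 0],
    [0, 200, 220, 0],
    [0, 210,   0, 0],
    [0,   0,   0, 0],
]
print(bounding_box(segment(img)))  # (1, 1, 2, 2)
```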

Another important aspect of machine perception is natural language processing (NLP), which enables machines to understand and interpret human language. NLP techniques involve parsing and analyzing text data to extract meaning, identify entities, and infer relationships between words and phrases. Applications of NLP include language translation, sentiment analysis, information retrieval, and chatbots.
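
Sentiment analysis, one of the applications listed above, can be illustrated with a lexicon-based scorer: tokenize the text, count positive and negative words, and compare. The word lists and function names here are hypothetical and tiny; modern NLP systems learn this mapping from data rather than relying on hand-written lexicons.

```python
import re

# Hypothetical miniature lexicons; real systems learn these from data.
POSITIVE = {"good", "great", "excellent", "love"}
NEGATIVE = {"bad", "poor", "terrible", "hate"}

def tokenize(text):
    # Lowercase, then keep runs of letters and apostrophes as tokens.
    return re.findall(r"[a-z']+", text.lower())

def sentiment(text):
    tokens = tokenize(text)
    # Each positive word adds 1, each negative word subtracts 1.
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("The camera is great, I love it!"))  # positive
```

This approach ignores context entirely: "I do not love it" would still score as positive, which is one reason learned models that consider word order and negation are preferred in practice.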

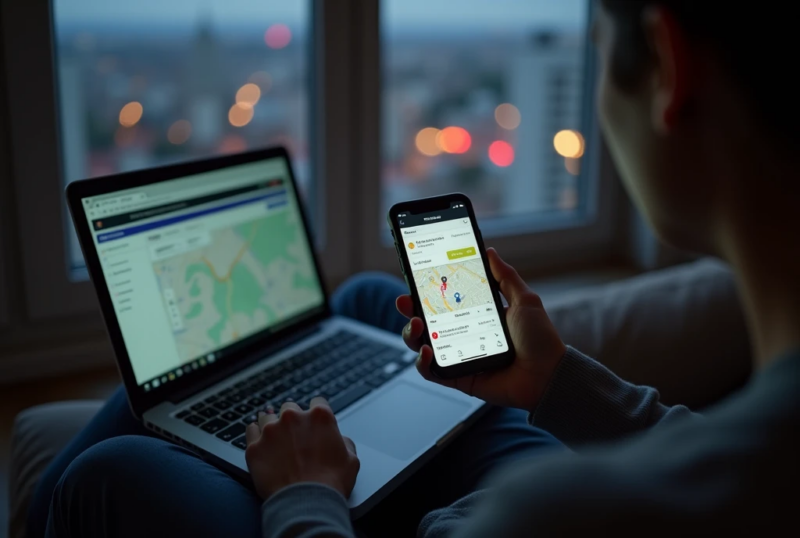

In addition to visual and auditory perception, machines can also perceive and interpret data from other sensors, such as accelerometers, gyroscopes, and GPS receivers. These sensors provide information about motion, orientation, and spatial position, enabling machines to navigate their surroundings and interact with physical objects.
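
To show how motion sensors feed navigation, the sketch below performs one-dimensional dead reckoning: it integrates accelerometer readings into velocity and then into position using semi-implicit Euler steps. The function name, the fixed time step, and the single-axis setup are simplifying assumptions; real inertial navigation works in three dimensions and must also account for orientation and gravity.

```python
def dead_reckon(accels, dt, v0=0.0, x0=0.0):
    """Integrate 1-D acceleration samples (m/s^2), taken every dt seconds,
    into a final (velocity, position) pair using semi-implicit Euler steps."""
    v, x = v0, x0
    for a in accels:
        v += a * dt  # integrate acceleration into velocity
        x += v * dt  # integrate the updated velocity into position
    return v, x

# A stationary device whose accelerometer has a constant 0.05 m/s^2 bias,
# sampled at 10 Hz for 10 seconds.
v, x = dead_reckon([0.05] * 100, dt=0.1)
print(round(v, 3), round(x, 3))  # 0.5 2.525
```

Because small sensor errors are integrated twice, position error grows quickly: in this example a device that never moves appears to have travelled about 2.5 m after 10 seconds. This drift is one reason inertial data is usually combined with other sensors.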

Machine perception is closely related to the field of sensor fusion, which involves combining data from multiple sensors to obtain a more comprehensive understanding of the environment. Sensor fusion techniques aim to overcome the limitations of individual sensors by integrating information from different modalities, such as vision, audio, and inertial sensors, to improve the overall perception and decision-making capabilities of machines.
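
A common lightweight fusion technique for inertial sensors is the complementary filter (the specific method is our choice for illustration, not one named in the text). It estimates tilt by blending the integrated gyroscope rate, which is smooth but drifts, with the tilt implied by the accelerometer's view of gravity, which is noisy but does not drift. The sketch assumes a single tilt axis, a stationary device whose accelerometer measures only gravity, and a blending weight `alpha` of 0.98; all names are hypothetical.

```python
import math

def accel_tilt(ax, az):
    # Tilt angle (radians) implied by the gravity components seen by the accelerometer.
    return math.atan2(ax, az)

def complementary_filter(samples, dt, alpha=0.98, angle0=0.0):
    """samples: iterable of (gyro_rate_rad_per_s, ax, az) tuples.

    Each step trusts the gyroscope for short-term changes (weight alpha)
    and pulls the estimate toward the accelerometer tilt (weight 1 - alpha).
    """
    angle = angle0
    for rate, ax, az in samples:
        gyro_angle = angle + rate * dt  # propagate the previous estimate with the gyro
        angle = alpha * gyro_angle + (1 - alpha) * accel_tilt(ax, az)
    return angle

# Device held still at 0.3 rad; the gyroscope has a 0.01 rad/s bias; 100 Hz for 10 s.
true_tilt = 0.3
samples = [(0.01, math.sin(true_tilt), math.cos(true_tilt))] * 1000
print(round(complementary_filter(samples, dt=0.01), 4))  # 0.3049
```

Integrating the biased gyroscope alone would drift by 0.1 rad over these 10 seconds, while the fused estimate settles within about 0.005 rad of the true tilt.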

One of the key enabling technologies for machine perception is machine learning, particularly deep learning, which has transformed the field in recent years. Deep learning models, loosely inspired by networks of neurons in the brain, learn hierarchical representations directly from raw sensory inputs: in an image model, for example, early layers tend to respond to edges and textures, while later layers respond to object parts and whole objects. This ability makes deep learning particularly well-suited for tasks such as image recognition, speech recognition, and natural language understanding. In addition, training on large, well-labeled audio collections, such as the sound-effect libraries Pro Sound Effects offers for machine learning, can improve the auditory perception of AI systems in applications such as speech recognition and environmental sound analysis.

Convolutional neural networks (CNNs) are a type of deep learning architecture commonly used in computer vision tasks. A CNN stacks convolutional layers, each of which slides small learned filters across its input to produce feature maps. These layers are typically interleaved with nonlinear activations and pooling layers, and are often followed by fully connected layers that produce the final prediction. By training CNNs on large datasets of labeled images, researchers can teach them to recognize objects, scenes, and patterns with high accuracy.
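
The core operation of a convolutional layer can be sketched in plain Python: slide a small kernel over the image, take the weighted sum at each position, and apply a ReLU nonlinearity. Here the kernel is hand-set to respond to dark-to-bright vertical edges, whereas in a trained CNN the kernel weights are learned. The function names are hypothetical, and pooling, padding, strides, and multiple channels are omitted.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (what deep-learning libraries call
    convolution): slide the kernel over the image without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            row.append(sum(image[i + di][j + dj] * kernel[di][dj]
                           for di in range(kh) for dj in range(kw)))
        out.append(row)
    return out

def relu(feature_map):
    # Elementwise nonlinearity: keep positive responses, zero out the rest.
    return [[max(0, v) for v in row] for row in feature_map]

# Dark left half, bright right half.
image = [[0, 0, 9, 9],
         [0, 0, 9, 9],
         [0, 0, 9, 9]]
edge_kernel = [[-1, 1],
               [-1, 1]]
print(relu(conv2d(image, edge_kernel)))  # [[0, 18, 0], [0, 18, 0]]
```

The strong response appears only in the column where brightness changes, which is exactly the kind of low-level feature that early CNN layers extract.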

Recurrent neural networks (RNNs) and transformers are other deep learning architectures commonly used in natural language processing. RNNs process a sequence one element at a time while carrying a hidden state forward, which suits sequential tasks such as speech recognition and language modeling. Transformers instead rely on self-attention, which lets every position in a sequence attend directly to every other position; this makes them effective at capturing long-range dependencies in text and well-suited to tasks such as machine translation and document summarization.
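
The contrast between the two architectures can be sketched with their core computations. The scalar RNN passes information forward one step at a time through its hidden state, while scaled dot-product attention, the building block of transformers, compares each query with every key at once. This is a minimal pure-Python illustration with hypothetical function names and hand-picked inputs; real models use learned weight matrices, many dimensions, and multiple attention heads.

```python
import math

def rnn(inputs, w_x, w_h, h0=0.0):
    # Scalar Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1}).
    # Information from early inputs reaches later steps only through h.
    h = h0
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
    return h

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: each query scores every key directly,
    so any position can draw on any other position in a single step."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Identical keys receive equal weight, so the output is the mean of the values.
print(attention([[1, 0]], [[1, 0], [1, 0]], [[2.0], [4.0]]))  # [[3.0]]
```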

Despite the significant progress made in recent years, machine perception still faces several challenges and limitations. One of the main challenges is achieving robustness and reliability in real-world environments, where conditions can be unpredictable and dynamic. Machines must be able to adapt to changes in lighting conditions, weather, background clutter, and other factors that can affect the quality and reliability of sensory data.
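
One small, partial defense against lighting changes is to standardize pixel intensities to zero mean and unit variance before further processing. A uniform change in brightness and contrast (a gain and an offset applied to every pixel) then largely cancels out. The sketch below assumes a flattened list of grayscale pixels and uses hypothetical names; it does nothing for shadows, glare, or other local lighting effects, which are usually handled with data augmentation or learned invariance.

```python
from statistics import mean, pstdev

def standardize(pixels, eps=1e-8):
    """Rescale intensities to zero mean and unit variance.

    eps guards against division by zero for perfectly uniform images.
    """
    mu = mean(pixels)
    sigma = pstdev(pixels)
    return [(p - mu) / (sigma + eps) for p in pixels]

dim = [10, 20, 30, 40]
bright = [2 * p + 50 for p in dim]  # same scene with higher gain and offset
print([round(v, 3) for v in standardize(dim)])     # [-1.342, -0.447, 0.447, 1.342]
print([round(v, 3) for v in standardize(bright)])  # [-1.342, -0.447, 0.447, 1.342]
```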

Another challenge is protecting privacy and ensuring security in perceptual systems, particularly in applications such as surveillance and facial recognition. There are concerns about the potential misuse of machine perception technologies for mass surveillance, profiling, and discrimination, leading to calls for the development of ethical guidelines and regulations to govern their use.

Researchers are also working to develop faster and more energy-efficient algorithms for machine perception, particularly for edge computing and mobile devices with limited computational resources. These efforts involve optimizing algorithms for speed, reducing memory and power consumption, and developing specialized hardware accelerators for perceptual tasks.
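
Quantization is one widely used way to cut memory and power use. It is our example technique, not one named in the text. Model weights are stored as 8-bit integers plus a scale factor instead of 32-bit floats, which reduces weight storage by roughly a factor of four. The sketch shows symmetric per-tensor quantization with hypothetical function names. Python integers are not actually one byte each, so the memory saving only materializes when the values are packed into an int8 array or run on hardware that supports int8 arithmetic.

```python
def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]
    using a single scale factor for the whole tensor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    # Recover approximate float weights from the stored integers.
    return [v * scale for v in q]

weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each restored weight differs from the original by at most scale / 2.
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-12)  # True
```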

In summary, machine perception draws on computer vision, natural language processing, sensor fusion, and machine learning to let machines interpret data from the world around them and to perceive, reason about, and interact with their environment in a manner similar to humans. Despite the challenges and limitations that remain, machine perception holds great promise for transforming applications ranging from autonomous vehicles and robotics to healthcare and smart cities.